- Plasticity’s the hot new 3D modeling app – and it sure makes a mean NURBS model;

- NURBS used to dominate computer animation, and then subdivision surfaces happened. Is it time to give them another shot?

- How do we classify the models we make? Is it worth it?

Believe it or not, 3D modeling has its fair share of trends that have come and gone and come back again over the years. Very recently, there’s been a substantial volume of hype for Plasticity, a paid1 3D modeling tool that claims to be ‘CAD for artists’ (Computer-Aided Design):

An engineer might tell you that ‘CAD for artists’ is an oxymoron, but they’re not the ones who are raving about Plasticity – NURBS tools offered to artists by polygonal modeling applications are quite paltry compared to the engineer’s kit. Plasticity’s rise is truly a case of jumping aboard the hype train – just about every disembodied voice I’ve encountered that talks about it repeats the claims of Plasticity’s website in more or fewer words: a “streamlined workflow,” “the perfect tool for artists and product designers,” “It’ll replace this, that, and the other,” and so on.

Chief among the artists who are going to great lengths to show just what Plasticity can do is Will Vaughan, a 3D artist who’s worked on many projects from major animation studios for the past 20-25 years. He – or, should that be Floyd, Oscar the Grouch’s terminally online cousin – is a Plasticity juggernaut, releasing a deluge of quick, Shorts-worthy Plasticity tutorials at a concentrated, consistent rate since it launched:

Vaughan’s a font of knowledge when it comes to 3D modeling – his 2011 book, Digital Modeling, spares no nook nor cranny introducing the concepts, techniques, and technologies that make up just about every industry that uses 3D software.

The idea of designing a CAD application with an interface that creates a more pleasant user experience for creatives is not new. MoI 3D predates Plasticity by at least 17 years, and can be controlled using a pen tablet:

https://web.archive.org/web/20060810145302/https://moi3d.com/

Hype is a tricky substance to handle (don’t shake it too much – it’s like nitroglycerin). Something that didn’t exist turns up, then we get to interact with it, carry out some familiar task in a new way, and that’s cool. At some point, hype goes supernova, before it begins to crystallise, until one day we wake up and temporarily forget what it was like working before that thing came along. Unlike other creative mediums subject to criticism – books, wine, food, film, television, video games – after the dust has settled, nine times out of ten, we seem to give software the slip.

https://www.wired.com/story/software-criticism/

There’s not been much room to evaluate Plasticity’s potential as an essential tool for the 3D artist’s utility belt. For me, the long and short of it is: it fills a niche, it’s not a replacement for anything, and there’s always more than one solution to any modeling problem. It’s often the case that when a new solution is in town, every problem becomes a nail for it to hammer.

Plasticity certainly tries to make a point of being different. The user experience is one that can only be described as ‘Blender, but NURBS.’ Blender has, from day dot, always stood apart from the other major polygonal modeling applications used in major studio projects – 3ds Max, Maya, Cinema 4D, etc. – by being different. Sure, you can export models in all the widely-used file formats, and as long as you haven’t used any features that aren’t standardised, or that are specific to particular applications (creasing and n-gons come to mind), it’ll play nice. When I say it’s different, I mean that Blender and its wider community are a case study in schismogenesis in action2 – “See that feature? We like that. It’s a likeable feature. We want that in Blender – but we’re gonna do it differently.” The GUI is different. The menus are different. The terminology is different (‘faces’ over ‘polygons,’ and as far as anyone’s concerned, what the software calls ‘normals’ might as well be a bunch of faux amis). The Blender user experience is, on the whole, several orders of different compared to other 3D applications. It’s essentially to other 3D applications what GIMP is to Photoshop – there’s a Photoshop way of doing things, and then you have the GIMP way.3 For as little as that reason alone, some industry professionals won’t touch it. If you’re a Blender user like me, you may roll your eyes, and brush it off as some strange elitism, but switching costs are a very real phenomenon, both for the individual artist and the employer they work for.

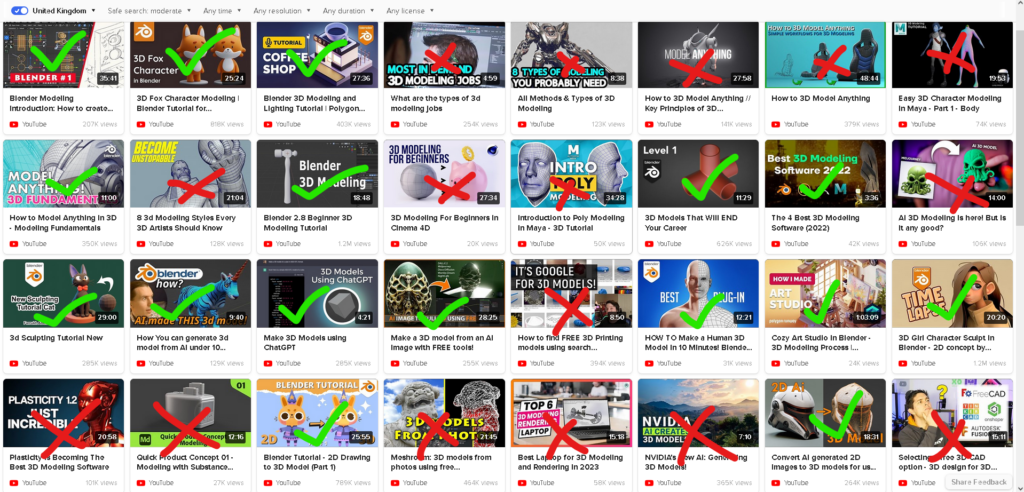

Blender being free makes it the most accessible 3D application on the market ipso facto. Perhaps as a result of this, the volume of 3D art content on YouTube that has been made using Blender – namely, tutorials – completely dwarfs all other competitors. Like most Blenderheads, I found my rite of passage to understanding the software in Andrew ‘Blender Guru’ Price’s donut tutorial:

While this isn’t a strictly rigorous scientific experiment, I searched for ‘3D modeling’ on DuckDuckGo and looked at the first 32 results. Half of them were videos that talked about or used Blender in some way, and the other half were a mixture of other popular (but paid) 3D apps:

Regardless of the software you use, free YouTube tutorials help people to go far. But there’s one topic in polygonal 3D modeling that gets talked about on YouTube constantly, in a way that’s never sat right with me. I don’t know for sure if it’s driving all the hype, but I’d be willing to make a bet. I’ll get onto that topic in a moment. First, a bit of history…

NURBS were an extension of the B-spline, which itself was a generalisation of the independent works of Pierre Bézier and Paul de Casteljau, respectively an engineer at Renault and a mathematician at Citroën. The invention of NURBS is often credited to Dr. Ken Versprille, although the initial research and development that led to the B-spline can be attributed to a group of graduate students of whom Versprille was a member:

https://blogs.sw.siemens.com/solidedge/Just-how-did-NURBS-come-to-be-by-Dr-Ken-Versprille/

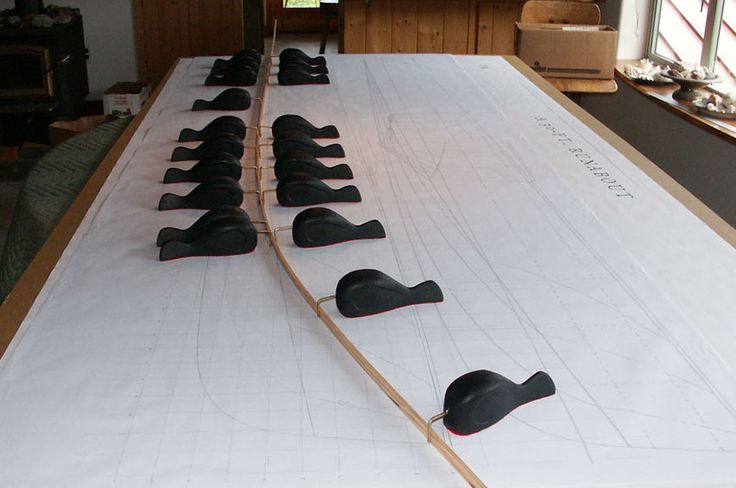

NURBS are so commonly used in engineering tasks because they can reliably express curvature with mathematical precision. They are one of many different varieties of spline, the digital designer’s equivalent of the historical tools used by shipbuilders to accurately draw the contours of their designs: thin, flexible strips of wood held in place by lead weights known as ducks. These bona fide fossils of engineering are what give splines their name. They’re also, in essence, piecewise polynomial functions, or, as they’re better known by school pupils, the Big Bad of GCSE/Higher Maths. Ubiquitous in design and manufacturing, most manufactured objects in front of you will have been prototyped and designed using a CAD application that supports NURBS – Autodesk Inventor, Fusion 360, Rhino, FreeCAD, take your pick.4
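To make ‘mathematical precision’ a bit more concrete, here’s a minimal sketch in Python. It assumes the simplest possible case, a single rational Bézier span (which is what a NURBS curve reduces to when it has only one segment), and the names `rational_bezier`, `quarter_circle` and `weights` are my own, not taken from any CAD package. The rational version, with a weight on the middle control point, traces a quarter circle exactly; give all three points the same weight and the curve bulges away from the circle.

```python
import math


def rational_bezier(points, weights, t):
    """Evaluate a rational Bezier curve at parameter t (0 <= t <= 1)."""
    n = len(points) - 1
    # Bernstein basis functions, each scaled by its control point's weight.
    basis = [
        math.comb(n, i) * (1 - t) ** (n - i) * t ** i * w
        for i, w in enumerate(weights)
    ]
    total = sum(basis)
    return tuple(
        sum(b * p[axis] for b, p in zip(basis, points)) / total
        for axis in range(len(points[0]))
    )


# Three control points and weights that describe a quarter of the unit circle.
quarter_circle = [(1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
weights = [1.0, math.sqrt(2) / 2, 1.0]

for step in range(5):
    t = step / 4
    x, y = rational_bezier(quarter_circle, weights, t)
    print(f"t={t:.2f}  distance from centre={math.hypot(x, y):.6f}")
```

Swap `weights` for `[1.0, 1.0, 1.0]` and the distances printed in the middle of the arc are no longer 1: that’s the ‘rational’ part of NURBS earning its keep.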

Animating a NURBS model suuuuuucks. It’s technically possible, but half the time you’re fighting the model to not break at the seams, and half the time you wonder if it’s really worth it. To animate any 3D object means to deform its original shape, not unlike the principles of animation in two dimensions. Characters modeled with NURBS are essentially a collection of patches that join up together. There’s a limit to how much you can deform these characters before they literally start breaking at these seams, and you have to keep twiddling and twiddling until they don’t. As I say – you can achieve expressive animations with NURBS, it’s just a pain in the ass getting there. The Mask (1994) is both a testament to how far NURBS could be pushed, and how feasible it was to seamlessly integrate live action and digital VFX:

One does not simply emulate Tex Avery-style slapstick in NURBS for a feature-length film.

Where engineers tend to rely on NURBS for mathematical precision, 3D artists nowadays tend to use polygons to build their models. Polygonal models are essentially to NURBS what pixels are to vector graphics. They’re made up of vertices (points in three-dimensional space), that connect up to form edges, which are filled in to make polygons. Three vertices make three edges, which make one triangle. Four vertices make a quad (or a square; or, under the hood, just two triangles). A low-polygon model means fewer vertices for the CPU to handle, and thus it’s faster to render in real time, but also much more practical for the artist to edit. This, however, creates the opposite problem of NURBS – we can’t describe perfectly smooth surfaces with polygons, and the lower the resolution, the less model there is that can be manipulated to create poses, facial expressions, fluid animation, and so on. The simple solution might be to make higher-resolution models… Except, making a high-resolution model by hand is extremely cumbersome, and animating it even more so. Your workstation, trying to keep up with hundreds of thousands, even millions of vertices, starts to grind to a halt.6
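If it helps to see that as data, here’s a rough sketch in Python of a polygonal model at its most basic: a list of vertex positions, and faces that refer to those vertices by index. The helper names `edges_of` and `triangulate` are hypothetical, and real applications store a good deal more (normals, UVs and so on), but the ‘quad is just two triangles’ idea looks like this:

```python
# Four vertices in 3D space, and one quad that connects them by index.
vertices = [
    (0.0, 0.0, 0.0),
    (1.0, 0.0, 0.0),
    (1.0, 1.0, 0.0),
    (0.0, 1.0, 0.0),
]
quad = (0, 1, 2, 3)


def edges_of(face):
    """Pair up each vertex index with the next one around the face."""
    count = len(face)
    return [(face[i], face[(i + 1) % count]) for i in range(count)]


def triangulate(face):
    """Split a convex polygon into a fan of triangles from its first vertex."""
    return [(face[0], face[i], face[i + 1]) for i in range(1, len(face) - 1)]


print(edges_of(quad))
print(triangulate(quad))
```

The same `triangulate` call on a five-sided face would hand back three triangles, which is one reason n-gons behave unpredictably between applications: each one is free to pick its own split.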

Animating a polygonal model suuuuuucked.

For about five minutes.

In November of 1997, patrons of the Laemmle Theatres cinema in Los Angeles were given an invitation to ‘get a glimpse of the future of animation’, courtesy of Pixar Animation Studios:

https://web.archive.org/web/20140419154851/https://www.thefreelibrary.com/Question%3A+Where+Can+You+Get+a+Glimpse+of+the+Future+of+Animation%3F%3B…-a020009689

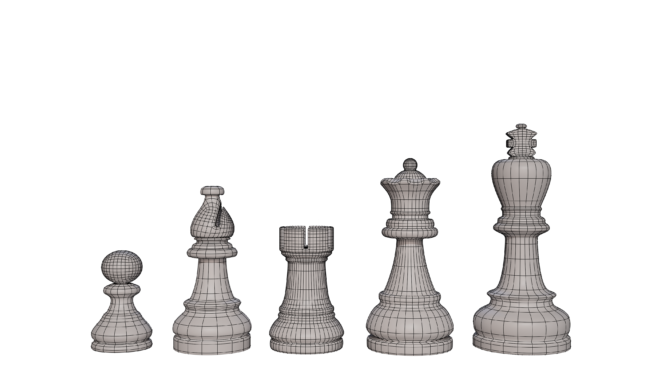

That ‘glimpse’ turned out to be Pixar’s latest short film, Geri’s Game. For audiences, it was a funny short film about an old man in a park playing chess against his cantankerous doppelganger.7 For artists, this short was a proof-of-concept for subdivision surfaces in character and cloth animation – a technique for generating smooth surfaces that had existed since the 1970s, but had only become feasible in preceding years due to advancements in computing power.

https://archive.org/details/geris-game-1997-restored

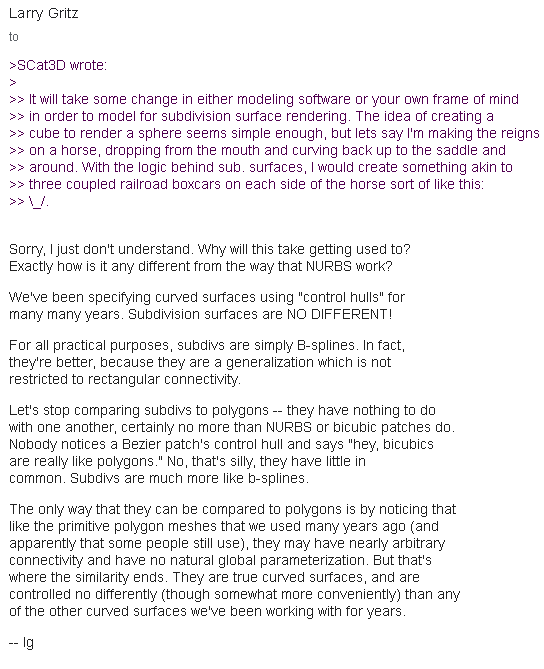

Subdivision surfaces both are and aren’t polygonal. Larry Gritz, one of the developers behind the rendering engine Pixar used to render A Bug’s Life, among other films, shared his thoughts in a Usenet post from 1998:

https://groups.google.com/g/comp.graphics.rendering.renderman/c/7F2UcrHla6A/m/YgAFMgVVXJIJ

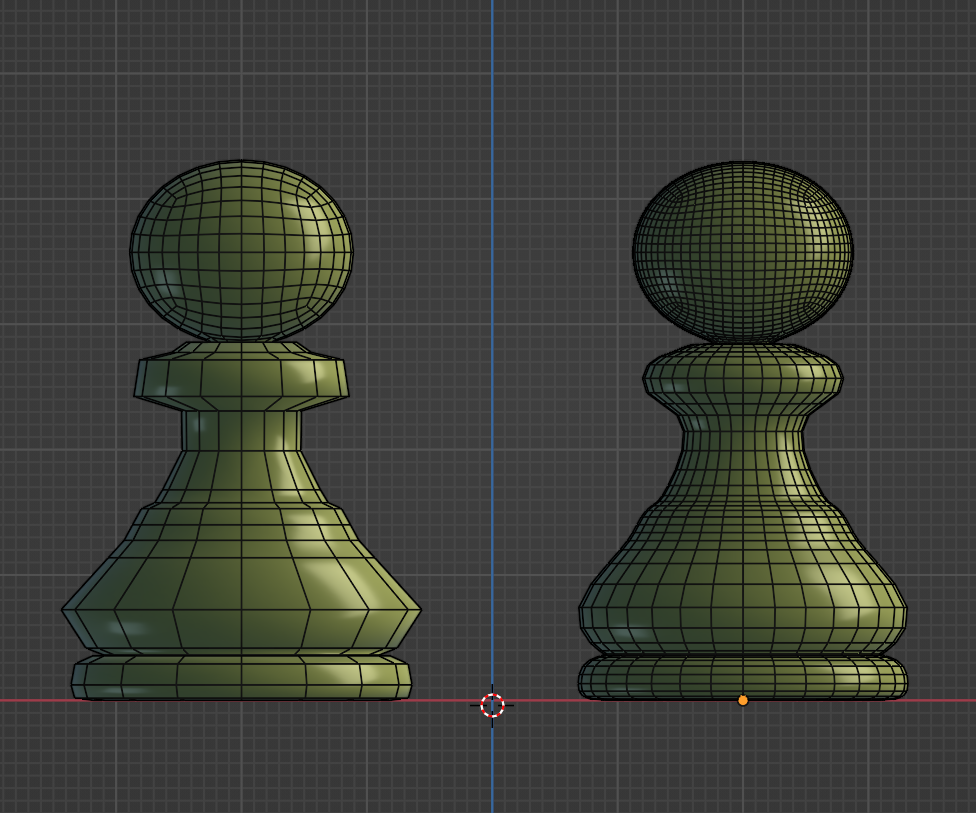

An artist building a subdivision surface model creates the control cage (or hull, in Gritz’s post). For our purpose of understanding, control cages are polygonal. They are the low-resolution version of a model that can be subdivided at render time to produce a smoother, higher-resolution result, one that closes in on the limit surface with every level of subdivision.

Then again, control cages aren’t polygonal. The vertices that make up control cages are the digital analogue to the ducks that once pinned a draughtsman’s spline in place. Move them, and you alter the spans that connect them – and affect the final limit surface. With NURBS, this rough outline of the final surface is refined infinitely. With subdivision surfaces, we choose how many times the control cage is refined on its way to the limit surface.

https://aliasworkbench.com/theoryBuilders/TB1_nurbs1.htm

Who better to explain how we go from the Pawn on the left to the Pawn on the right than Tony DeRose, one of the authors of a seminal 1998 paper8 describing the research they had done for Geri’s Game?

In summary, one level of subdivision splits every quad into four, giving roughly four times as many vertices, positioned by smoothing and averaging the original vertices’ positions in a scheme that generalises bicubic B-spline surfaces. Basically: the subdivided surface looks smoother than before, but it could be even smoother.

https://en.wikipedia.org/wiki/Bicubic_interpolation
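For the curious, here’s a minimal sketch of one level of subdivision in Python. It assumes the Catmull-Clark scheme and a closed mesh (every edge shared by exactly two faces), so it ignores the boundaries and creases a production implementation has to handle. The function names and the cube are mine, for illustration only.

```python
from collections import defaultdict


def average(points):
    """Component-wise mean of a list of equal-length tuples."""
    return tuple(sum(axis) / len(points) for axis in zip(*points))


def catmull_clark(verts, faces):
    """One Catmull-Clark step on a closed mesh (every edge borders two faces)."""
    face_pts = [average([verts[i] for i in face]) for face in faces]

    edge_faces = defaultdict(list)  # edge -> faces on either side of it
    vert_faces = defaultdict(list)  # vertex -> faces that touch it
    for fi, face in enumerate(faces):
        for k, v in enumerate(face):
            vert_faces[v].append(fi)
            edge_faces[frozenset((v, face[(k + 1) % len(face)]))].append(fi)

    vert_edges = defaultdict(list)  # vertex -> edges that meet at it
    for edge in edge_faces:
        for v in edge:
            vert_edges[v].append(edge)

    midpoints = {e: average([verts[i] for i in e]) for e in edge_faces}

    # Original vertices are pulled towards the average of their surroundings.
    new_verts = []
    for v, p in enumerate(verts):
        n = len(vert_edges[v])
        f = average([face_pts[fi] for fi in vert_faces[v]])
        r = average([midpoints[e] for e in vert_edges[v]])
        new_verts.append(
            tuple((f[c] + 2 * r[c] + (n - 3) * p[c]) / n for c in range(3))
        )

    # One new vertex in the middle of every face...
    face_index = {}
    for fi, fp in enumerate(face_pts):
        face_index[fi] = len(new_verts)
        new_verts.append(fp)

    # ...and one on every edge, averaged from its ends and neighbouring faces.
    edge_index = {}
    for edge, fis in edge_faces.items():
        edge_index[edge] = len(new_verts)
        new_verts.append(
            average([verts[i] for i in edge] + [face_pts[fi] for fi in fis])
        )

    # Each corner of each old face becomes one new quad.
    new_faces = []
    for fi, face in enumerate(faces):
        for k, v in enumerate(face):
            nxt = frozenset((v, face[(k + 1) % len(face)]))
            prv = frozenset((face[k - 1], v))
            new_faces.append((v, edge_index[nxt], face_index[fi], edge_index[prv]))
    return new_verts, new_faces


cube_verts = [
    (-1, -1, -1), (1, -1, -1), (1, 1, -1), (-1, 1, -1),
    (-1, -1, 1), (1, -1, 1), (1, 1, 1), (-1, 1, 1),
]
cube_faces = [
    (0, 3, 2, 1), (4, 5, 6, 7), (0, 1, 5, 4),
    (1, 2, 6, 5), (2, 3, 7, 6), (3, 0, 4, 7),
]

level1_verts, level1_faces = catmull_clark(cube_verts, cube_faces)
level2_verts, level2_faces = catmull_clark(level1_verts, level1_faces)
print(len(cube_faces), len(level1_faces), len(level2_faces))
```

The face count quadruples at each level, while the cube’s corners get pulled inwards: that’s the control cage rounding off on its way towards a sphere-ish blob.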

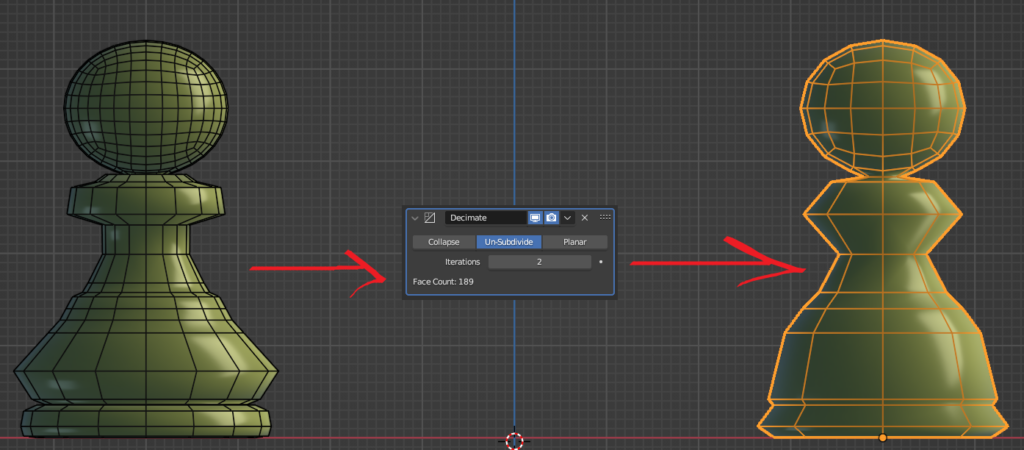

A good subdivision surface model should be flexible and robust. It’s like a yoghurt culture – just as all future batches can be derived from a single starter culture, so a subdivision surface model, if it is robust, can be adapted for many different mediums. A cinematic production can afford to render models with a resolution in the millions of vertices. Not so for game engines, which need to render everything on screen in real time, and at a consistent frame rate. Instead, the subdivision cage’s resolution may be algorithmically reduced. In Blender, this is done using the Decimate modifier.

Neither Pixar nor Geri’s Game made the switch from NURBS to SubD in other studios an overnight one. Every major 3D software vendor had to come up with their own implementation, until 2013, when Pixar went open-source with their OpenSubdiv project. It quickly became the de facto standard implementation for subdivision surfaces. If it uses OpenSubdiv, then artists can hop from software to software and have their control cages subdivide in exactly the same way.

You wanna know who really killed NURBS? Jar Jar Binks. I’m dead serious. According to Delaney King, another industry veteran9, Jar Jar is one of the last characters to be fully modeled with NURBS by Industrial Light and Magic (who also worked on the VFX for The Mask, among other major blockbuster films).

https://twitter.com/delaneykingrox/status/1122727603532468224

Jar Jar’s reputation precedes him. He repulses me, physically; his performance has a particular combination of kitsch and insipidness that sets my teeth on edge.10 You don’t have to ask a Star Wars fan for their opinion of him – they’ll be quite happy to tell you.11 He did not break any new ground in CG whatsoever. Really, he is the pain of using NURBS taken form. The pain poured into restitching this flap of skin on nearly every frame was real. Even if it’s not strictly true that he was the definitive coup de grâce, he’s a much more satisfying bookend to the domination of NURBS in computer animation.

After The Phantom Menace, use of NURBS at ILM steadily declined, and subdivision surfaces emerged as best practice. I always like to think of that phrase in the way Steven Poole put it in his 2013 book, Who Touched Base in My Thought Shower?: That thing wot another company does that we want to do, too, but rather than just blatantly plagiarise that thing, we’ll make a couple changes and then praise, praise, PRAISE the company we nick from like it’s their obituary.

For nearly 25 years, subdivision surfaces have been the norm. Folks who want to bring their creations to life in 3D inevitably look to the web to learn how to model – what you should/shouldn’t do, how to make this, or what’s the best way of doing that. Predating the pedagogical revolution brought about by YouTube is a debate among 3D artists that still continues to this day. As Will Vaughan writes in Digital Modeling, anyone who studies 3D modeling long enough will learn that artists develop their own personal taxonomy for what defines a hard surface model, an organic model, or a semi-organic model. How they know what’s what – their epistemology – is how they might define themselves in the industry, to make it easier for employers to find them. He defines three rough categories for these schools of thought:

- Production-Driven: Models are classified based on their purpose in a production. Static objects that won’t move are hard surfaces, whereas objects that will deform in some way are organic.

- Attribute-Driven: The object’s appearance is its classification. Objects with tight edges and simpler shapes might be hard surfaces, like old-school robots (think R.O.B., or K-9 from Doctor Who). Objects with lots of curvature and complex shapes – like humans – are organic. Semi-organic comprises everything that doesn’t fit these two categories – cars, firearms, helmets, etc.

- Construction-Driven: The techniques the artist uses to create the model, along with its topology, are what classify it. If you digitally sculpt an object in ZBrush, that’s an organic model. If you cut and join simple shapes together using Boolean operators, and add in bevels to soften the edges, that’s a hard surface model.

These categories are useful to keep in mind when watching modeling videos on YouTube. You can understand where the artist is coming from in their approach. Most creators I’ve come across on YouTube are a hybrid of each category. If they use Blender, they’ll make use of paid addons like HardOps and Boxcutter to make their hard-surface modeling workflow ‘more efficient.’

Ultimately, I think the debate is a white elephant: an ostensibly valuable thought exercise, but its practicality is a tad exaggerated. On YouTube, it’s more of an advertisement: come and learn hard surface modeling, the BEST way of making models QUICKLY, and why you don’t need to worry about this and that, and by the way, have you checked out our course yet? It’s 30% off right now! Although, this is more to do with changes to the platform over the years, rather than YouTubers themselves.

Remember when YouTube’s slogan was “Broadcast Yourself”? It still is, in spirit. Keep in mind that YouTube back in the day was the vlogging website. You may take YouTube out of vlogging, but you can’t take vlogging out of YouTube.

Every original YouTube upload that intends to inform its audience, but cannot be traced to a verifiable source of academic expertise, is subject to the golden rules of blogging – no exceptions. Some of those golden rules are, in my opinion:

- You don’t have to be an expert – you believe other people’s experiences count for something;

- You don’t have to be entirely serious – you don’t pull rank, thus no-one is pressed to listen to what you’ve got to say;

- What you say is tacitly protected by the fact that yours is not the only perspective – you’re allowed to be wrong, and your audience knows this.

https://semaphoreandcairn.com/2018/02/16/blogging-in-an-expert-societ

As consumers, we’ve got to be careful that we don’t reify any claims made as solid fact. Some YouTubers may try to play fast and loose with these rules more than others12. With regard to the typical hard surface modeling advice that gets thrown around, there’s nothing that’s demonstrably false. But the more I watch, the more I see the same advice, and the more I wonder where I might find other perspectives, or if all of hard surface pedagogy is really just five tutorials, each filled with concepts from the other four.13

Here’s the thing: hard surface modelers on YouTube hate hard surface modeling with polygons. The objects they make can quickly be made with Boolean operations. Because NURBS surfaces are mathematical expressions, booling them together through unions, differences, and intersections is quick and painless. But booling two polygonal models suuuuuucks. If you want a ‘good’ Boolean cut, both objects have to have equal resolution in the areas you’re cutting from. Even if you do cut it correctly, you may have to go in and manually move and merge vertices if you’re going to subdivide it later.
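To show why Booleans on mathematical expressions are so painless, here’s a small sketch in Python. One loud caveat: it doesn’t use NURBS at all. I’ve swapped in implicit functions (negative inside a shape, positive outside), because they’re the shortest way I know to show the idea, and every name below is made up for illustration. With shapes defined this way, a union, intersection or difference is a one-liner, and there’s no mesh resolution to match up anywhere.

```python
import math


def sphere(centre, radius):
    """Implicit sphere: negative inside, zero on the surface, positive outside."""
    return lambda point: math.dist(point, centre) - radius


def cube(half_size):
    """Implicit axis-aligned cube centred on the origin."""
    return lambda point: max(abs(c) for c in point) - half_size


def union(a, b):
    return lambda point: min(a(point), b(point))


def intersection(a, b):
    return lambda point: max(a(point), b(point))


def difference(a, b):
    """Everything inside a that is not also inside b."""
    return lambda point: max(a(point), -b(point))


def inside(shape, point):
    return shape(point) < 0


block = cube(1.0)
ball = sphere((1.0, 1.0, 1.0), 0.5)

cut_corner = difference(block, ball)   # ball scooped out of the block's corner
merged = union(block, ball)            # ball fused onto the block's corner

print(inside(cut_corner, (0.0, 0.0, 0.0)))   # middle of the block
print(inside(cut_corner, (0.9, 0.9, 0.9)))   # in the scooped-out corner
print(inside(merged, (1.2, 1.2, 1.2)))       # outside the block, inside the ball
```

A polygonal Boolean has to do all of this with finite triangles and quads instead, working out where each face of one mesh slices through the faces of the other, which is where the mismatched resolutions and stray vertices come from.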

Booleans make models at speed, but it’s a Faustian bargain – unless you manually fix the model’s topology to be all quads again, it will only ever be usable as a static object, and cannot deform without breaking. Making it in NURBS and then converting the model to polygons is no good, either – no algorithm exists that can convert a NURBS surface to a control cage that’ll take whatever deformation you throw at it. If you want the model to deform, you have to use that NURBS conversion as a base and retopologise – which means building the whole model again, quad by quad14. When it comes to professional studio productions, the clock is always ticking, and so often what matters above all else is being able to move fast and make things. If you’re not making new things, you’re not moving fast enough. Retopologising a static background object for future use is written off as a waste of time.

Booleans are prototyping tools – they put an artist’s ideas on the screen, but those models ought to be a means to an end. Artists want prototypes that look good and can be hashed out post-haste, but they don’t want to spend hours learning an interface that was not designed with them in mind. Folks who have yet to become professional artists want to hash out models post-haste because prevailing social forces tell us that faster workflow = more models = more hustling = bigger portfolio = more chances of landing a job, or at least being noticed.15

Blender being the most accessible 3D application means that, in theory, if you take a pinch of inspiration from it, assuming its popularity is a corollary of its accessibility and UX, you cast the widest net. Of course Plasticity is proving popular. It will probably continue to grow steadily for a good couple of years at least; what happens when it can’t attract new customers and treat its early investors to new features remains to be seen.

If you’re a hard surface modeler working in Blender, you’ve every right to feel like Blender’s default tools bog you down. All the more power to you if you decide to buy Plasticity. What I shall say, however, is that the search for the right tool for the right job need not take you so far – if you’re willing to stick to polygons, two of Blender’s pre-installed addons go a very long way:

- F2: automatically fills in polygons for you, depending on which vertices/edges you have highlighted, and where your cursor is on the screen;

- LoopTools: a collection of multi-faceted tools that can perform common tasks using highlighted loops.

And if you’re looking for a different perspective on making good 3D models, Ian McGlasham’s YouTube channel is a must-watch. Every video is a gold mine of information, full to the brim with modeling knowledge. You won’t take everything in on your first time around, but come back to the video at a later date, and you’ll definitely go, ‘Oh, that’s what he was talking about.’

https://youtube.com/channel/UClUFZXT27Q7n4L84xAoWjBQ

Rather than start from model taxonomies, he develops concepts about what makes a good subdivision surface model, chiefly what one needs to know to create a ‘framework mesh.’ He argues that it’s the ultimate goal of any modeling task, and once you’ve made it, you’ve got a base model that can be adapted for any purpose – production-driven, construction-driven, or attribute-driven:

https://www.hardvertex.com/theframeworkmesh

Hard surfaces? As with NURBS models, think of control cages as a collection of local geometry areas, contained within boundaries, and connected by them, too:

https://www.hardvertex.com/boundaryanatomy

https://www.hardvertex.com/localgeometry

Hard surfaces with Booleans? There are other ways to union or intersect geometry that, while they may take more steps in the beginning, save time you’d otherwise spend merging and cleaning up rogue vertices:

https://www.hardvertex.com/hardsurfacemodelling

https://www.hardvertex.com/joiningcylinders

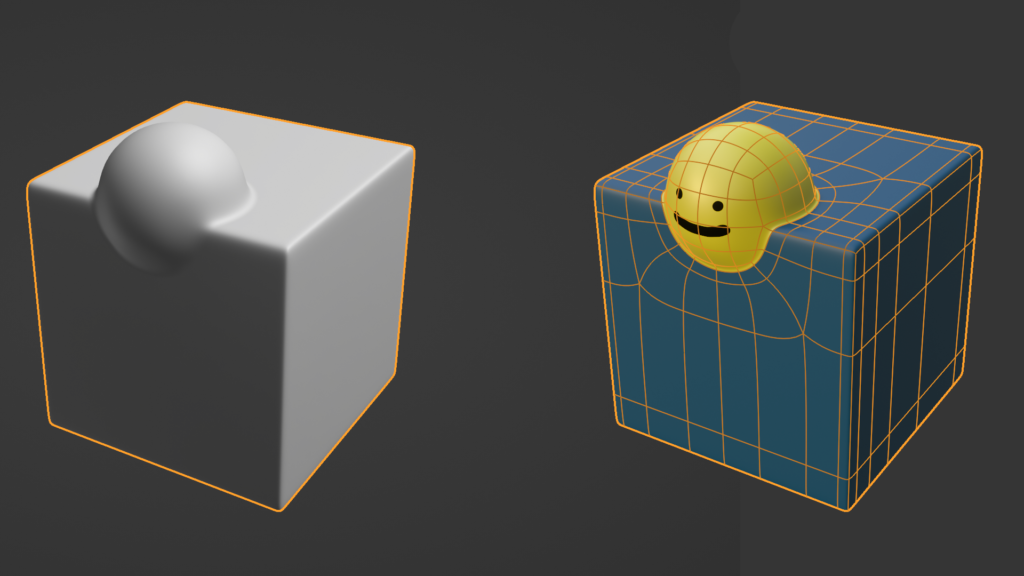

One exceptional use case for combining Booleans with subdivision surfaces is when a sphere is used as the cutting object. I think this might be because spheres are the only primitive shape to lack corners – every point on the limit surface is a point of curvature. Thus, the resolution of the sphere will drive the rest of the geometry in that area – you can’t add or remove loops from a sphere without impacting its curvature, and making bumps, ridges, and shading artifacts.

https://www.hardvertex.com/booleansphere

In the original video, a sphere is cut out of the shape. Unnecessary faces are deleted as we work on a ‘patch’ to be joined to the final cube model – that is, the sphere cutout, and the loops that we’ve inset surrounding the cutout. Following the steps in the video, I did the opposite, and unioned the sphere and cube instead.

McGlasham’s method of controlling curvature in a subdivision model is not to model it in manually – as one would with bevels and chamfers – but to place control loops near the edges. Defining the sharpness of corners this way means that the model isn’t tied to a feature that’s not standardised. Although Pixar has used a creasing technique for many of its films, it’s not standardised… yet.

https://www.fxguide.com/fxfeatured/pixars-opensubdiv-v2-a-detailed-look
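Here’s a rough way to see what control loops do, sketched in Python. It’s a 2D stand-in of my own devising, not McGlasham’s method: a closed square outline smoothed with cubic B-spline subdivision, the curve-sized cousin of what happens to a control cage. The function names and the 0.1 offset are arbitrary choices for illustration. The sketch measures how far the smoothed outline ends up from the square’s top-right corner, with and without extra points placed close to each corner.

```python
import math


def subdivide_closed(points):
    """One round of cubic B-spline subdivision on a closed 2D outline."""
    count = len(points)
    refined = []
    for i in range(count):
        prev, cur, nxt = points[i - 1], points[i], points[(i + 1) % count]
        # Existing point, nudged towards its neighbours (weights 1/8, 6/8, 1/8).
        refined.append(tuple((a + 6 * b + c) / 8 for a, b, c in zip(prev, cur, nxt)))
        # New point halfway along the edge to the next point.
        refined.append(tuple((b + c) / 2 for b, c in zip(cur, nxt)))
    return refined


def gap_to_corner(outline, corner=(1.0, 1.0), levels=5):
    """Distance from the corner to the nearest point of the smoothed outline."""
    points = outline
    for _ in range(levels):
        points = subdivide_closed(points)
    return min(math.dist(p, corner) for p in points)


# A bare square: one control point per corner.
bare = [(1, 1), (-1, 1), (-1, -1), (1, -1)]

# The same square with a supporting point 0.1 units either side of each corner.
supported = [
    (1, 1), (0.9, 1), (-0.9, 1),
    (-1, 1), (-1, 0.9), (-1, -0.9),
    (-1, -1), (-0.9, -1), (0.9, -1),
    (1, -1), (1, -0.9), (1, 0.9),
]

print(f"bare square:      {gap_to_corner(bare):.3f}")
print(f"with support pts: {gap_to_corner(supported):.3f}")
```

The supported outline hugs the corner far more tightly, and it gets there with nothing but ordinary control points, so it should subdivide the same way in any application.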

Not every model in a production need be a subdivision surface – mainly the ones you’re going to see up close and the most often (‘hero’ models, they’re called). Often it’s the case that background elements like buildings or mountain ranges may just be a primitive shape or geometry generated from photogrammetry. The more framework meshes one makes, however, the more time one potentially saves in the long run. Why remodel an asset for a remake being made on more powerful hardware when a framework mesh can give you a higher-resolution version of said asset?

The world of 3D artistry is so vast. Modeling’s only a part of it, and this post only a microscopic slice of what I’ve experienced. I’m not a professional16, and I have the privilege of learning and modeling at my own pace, so I don’t have the experience of working in a studio. But of the hobbies I’ve picked up in the last year or so, it is definitely the one that has gotten me to put my Critical Thinking hat on the most. YouTube’s made us all autodidacts to some degree. Never has it been more apparent that all of us are learning, all of the time, and everyone wants to show off what they’ve learned; the asset packs and paid courses come later. I don’t think Plasticity will change the hard surface vs. organic vs. semi-organic debate, and I won’t find myself using it, but it does offer an expressive introduction to modeling with NURBS, and thus another approach to solving modeling problems. For that, it’s got its merits.

- Isn’t it a rare treat nowadays that when you buy a program, you’re given a perpetual license – pay once, lease forever? Of course, it’s not as rare a treat as “pay once, own forever, and by the way, here’s the source code so you can tweak it and release your own version.” ↩︎

- Referring to David Graeber and David Wengrow’s use of the term in The Dawn of Everything: https://www.newyorker.com/magazine/2021/11/08/early-civilizations-had-it-all-figured-out-the-dawn-of-everything ↩︎

- Which, of course, all starts when you install the two programs.

>using linux in front of class mates

>teacher says “Ok students, now open photoshop”

>start furiously typing away at terminal to install Wine

>Errors out the ass

>Everyone else has already started their classwork

>I start to sweat

>Install GIMP

”Umm…what the fuck is THAT anon?” a girl next to me asks

>I tell her its GIMP and can do everything that photoshop does and IT’S FREE!

>“Ok class, now use the shape tool to draw a circle!” the teacher says

>I fucking break down and cry and run out of the class

>I get beat up in the parking lot after school ↩︎

- Although, don’t pick at random – you’ll find that Autodesk has multiple fingers in every pie when it comes to 3D software. ↩︎

- Image credits go to https://www.pinterest.se/pin/lofting-ducks-spline-weights-drafting-whales-batten-weights-i-used-to-do-this-work-lofting-back-in-the-60s-at-douglas-and-northrop-aircradt–562527809677424125/ ↩︎

- This isn’t to say that low-poly character models can’t be expressive, just that poly count can limit what you can do. Depends on the project – plenty of games use textures and normal maps for character facial expressions. If you’re making a CG Lego stop-motion animation, changing a character’s facial expression is quite literally as simple as a texture swap. ↩︎

- Himself, of course. ↩︎

- DeRose, T., Kass, M. and Truong, T. (2023a) ‘Subdivision surfaces in character animation’, Seminal Graphics Papers: Pushing the Boundaries, Volume 2, pp. 801–810. doi:10.1145/3596711.3596795. Available at: https://dl.acm.org/doi/10.1145/3596711.3596795 ↩︎

- Has worked on many AAA games – Dragon Age: Origins, Civilization IV, Unreal Tournament – and as a shader artist on Where the Wild Things Are: https://www.delaneyking.com/folio ↩︎

- I’m not talking about Ahmed Best’s performance, I’m talking about Jar Jar’s performance. ↩︎

- For the record, I’ve only ever seen The Phantom Menace. If Ian Doescher’s Shakespeare versions count, Verily, a New Hope. ↩︎

- Pay no attention to the cryptobro who covers his ass with, “I am not a financial advisor…” ↩︎

- https://twitter.com/tveastman/status/1069674780826071040 ↩︎

- A subdivision cage can contain triangles and n-gons (polygons with more than 4 vertices), but in the case of the former, they’re best placed in areas that won’t be seen much, or on flat surfaces. Likewise for the latter, but not all 3D applications support n-gons. If you’re working in a pipeline, it may be best not to take that chance. ↩︎

- I take umbrage at this belief not because I think you shouldn’t keep working on things regularly, but because it’s the sort of thing thrust upon us workers that at best prepares us for crunch, and at worst acts as a driver for crunch. To me it’s a complex, nuanced topic that deserves its own post. ↩︎

- See, there it is again. The Shield of Iamnota. ↩︎